Spatial Audio

What is "Spatial Audio"?

"Spatial Audio" means different things to different people. In the general sense, ambisonics is a spatial audio technology, as are a range of other 3D audio techniques. However, the term now seems most closely associated with "object-based" audio formats such as Dolby Atmos and Apple Spatial Audio, so that is generally what we mean when we use it these days.

"Object-Based" Audio Formats

There has been a fair amount of hype around object-based audio formats in recent years. Here, "objects" are mono audio signals with additional data, often referred to as "metadata", which indicates where the audio should be placed spatially.

These objects are generally used in combination with a "channel-based" bed, like 5.1 or 7.1.2. This bed uses essentially the same "surround" technology that has been used in cinemas and home surround sound systems for decades, sometimes with a few more channels. For instance, 7.1.2 adds two ceiling speakers to 7.1. This channel-based bed is generally used for background, with objects used for the foreground.
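To make the idea concrete, here is a minimal sketch of how a scene combining a channel-based bed with objects might be represented. The class and field names (`AudioObject`, `ChannelBed`, `Scene`, `azimuth_deg`, `elevation_deg`) are hypothetical and do not come from any particular format. Real formats such as Dolby Atmos or ADM carry richer metadata, including positions that change over time.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AudioObject:
    """A mono signal plus metadata saying where it should be placed (hypothetical fields)."""
    name: str
    samples: List[float]
    azimuth_deg: float    # assumed convention: 0 = front, positive = towards the left
    elevation_deg: float  # 0 = head height, 90 = directly overhead


@dataclass
class ChannelBed:
    """A conventional surround bed: one signal per nominal speaker channel."""
    layout: str                  # e.g. "5.1" or "7.1.2"
    channels: List[List[float]]  # one list of samples per channel


@dataclass
class Scene:
    bed: ChannelBed
    objects: List[AudioObject] = field(default_factory=list)

    def signal_count(self) -> int:
        """Total number of audio signals the scene carries (bed channels plus objects)."""
        return len(self.bed.channels) + len(self.objects)


# Usage: a 7.1.2 background bed (10 channels) with two foreground objects.
silence = [0.0] * 4
bed = ChannelBed("7.1.2", [list(silence) for _ in range(10)])
scene = Scene(bed, [
    AudioObject("dialogue", list(silence), azimuth_deg=0.0, elevation_deg=0.0),
    AudioObject("helicopter", list(silence), azimuth_deg=45.0, elevation_deg=60.0),
])
print(scene.signal_count())  # 12
```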

The main advantage of carrying the objects and their spatial data all the way to the final playback system is that, as with ambisonics, decisions about how the audio will be rendered do not have to be made until the final speaker layout is known. This typically works better than trying to convert between channel-based formats. For instance, a 5.1 system receiving a 7.1.2 channel-based bed needs to make difficult decisions about what to do with the audio in the two ceiling speaker channels, whereas a mono object with spatial information can be placed somewhere sensible on either a 5.1 or 7.1.2 system quite easily.
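As an illustration of why objects adapt easily to different layouts, the sketch below places a mono object on any horizontal speaker layout using 2D pairwise amplitude panning (a simple form of VBAP, vector base amplitude panning). This generic textbook technique is chosen only for the example; it is not the renderer used by Dolby Atmos, Apple Spatial Audio or any other product. It ignores elevation and assumes no gap between adjacent speakers is 180 degrees or wider.

```python
import math
from typing import List


def pan_object_2d(source_az_deg: float, speaker_az_deg: List[float]) -> List[float]:
    """Return one gain per speaker (in the given order) for a mono object.

    Angles are in degrees, 0 = front, positive = towards the left.
    The object is panned between the two adjacent speakers that enclose it,
    with gains normalised to constant power.
    """
    order = sorted(range(len(speaker_az_deg)), key=lambda i: speaker_az_deg[i] % 360.0)
    src = math.radians(source_az_deg)
    px, py = math.cos(src), math.sin(src)
    gains = [0.0] * len(speaker_az_deg)
    for k in range(len(order)):
        i, j = order[k], order[(k + 1) % len(order)]
        a, b = math.radians(speaker_az_deg[i]), math.radians(speaker_az_deg[j])
        l1x, l1y = math.cos(a), math.sin(a)
        l2x, l2y = math.cos(b), math.sin(b)
        det = l1x * l2y - l2x * l1y
        if abs(det) < 1e-9:
            continue  # speakers collinear (e.g. exactly opposite): pair unusable
        # Solve [l1 l2] @ [g1, g2] = p with Cramer's rule.
        g1 = (px * l2y - l2x * py) / det
        g2 = (l1x * py - px * l1y) / det
        if g1 >= -1e-9 and g2 >= -1e-9:
            g1, g2 = max(g1, 0.0), max(g2, 0.0)
            norm = math.hypot(g1, g2)
            gains[i], gains[j] = g1 / norm, g2 / norm
            return gains
    raise ValueError("no speaker pair encloses the source direction")


# The same object rendered on two different layouts (LFE omitted).
layout_51 = [30.0, -30.0, 0.0, 110.0, -110.0]                # L, R, C, Ls, Rs
layout_71 = [30.0, -30.0, 0.0, 90.0, -90.0, 150.0, -150.0]   # L, R, C, Lss, Rss, Lrs, Rrs
print([round(g, 3) for g in pan_object_2d(15.0, layout_51)])   # [0.707, 0.0, 0.707, 0.0, 0.0]
print([round(g, 3) for g in pan_object_2d(120.0, layout_71)])  # [0.0, 0.0, 0.0, 0.707, 0.0, 0.707, 0.0]
```

Because the object's position travels with it, the renderer only needs to know the final speaker angles; nothing about the mix has to be decided in advance.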

Objects have other advantages too, such as the option to include or exclude them from a scene, which allows a degree of basic interactivity. For example, an audio commentary can be switched on or off without requiring a whole separate mix.

Limitations

Real acoustics are complicated, and modelling complex scenes using simple objects is often approximate at best. In particular, reverberation can involve massive numbers of reflections which cannot be modelled easily with objects. So, complex scenes and reverberation are typically carried in the background channel-based bed.

This means that a substantial part of some scenes is still carried as a channel-based bed, which may or may not match the speaker layout it is played on.

Another common limitation of Spatial Audio systems is that they cannot present sounds from below head height. Unlike ambisonics, which can represent sound from any direction, they can only place sounds at head height or above.

Spatial Audio Systems and Ambisonics

Like objects, ambisonics also allows rendering decisions to be deferred until the final speaker layout is known, although it does this by carrying a mathematical decomposition of the acoustic scene rather than by building the scene up using objects.
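For comparison, the sketch below shows first-order ambisonic encoding, in which each mono source is projected onto a fixed set of spherical-harmonic channels. It assumes the common AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalisation) and azimuth measured anticlockwise from the front; the function names are illustrative. Any number of sources sums into the same four channels, which is the sense in which ambisonics carries a decomposition of the whole scene rather than a list of objects.

```python
import math
from typing import List


def encode_foa(sample: float, azimuth_deg: float, elevation_deg: float) -> List[float]:
    """Encode one sample of a mono source into first-order ambisonics (ACN/SN3D)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return [
        sample,                                # W: omnidirectional component
        sample * math.sin(az) * math.cos(el),  # Y: left/right
        sample * math.sin(el),                 # Z: up/down
        sample * math.cos(az) * math.cos(el),  # X: front/back
    ]


def mix_sources(sources) -> List[float]:
    """Sum several (sample, azimuth, elevation) sources into one 4-channel frame."""
    frame = [0.0, 0.0, 0.0, 0.0]
    for sample, az, el in sources:
        for ch, value in enumerate(encode_foa(sample, az, el)):
            frame[ch] += value
    return frame


# Usage: two sources, one hard left and one overhead, still occupy only four channels.
frame = mix_sources([(1.0, 90.0, 0.0), (0.5, 0.0, 90.0)])
print([round(v, 3) for v in frame])  # [1.5, 1.0, 0.5, 0.0]
```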

Some formats, such as MPEG-H and the Audio Definition Model (ADM), can carry three types of audio stream, labelled "channel-based", "object-based" and "scene-based" audio. Here, "scene-based" typically does mean ambisonics! However, ambisonics does not really need to be seen as a different category of audio.

The Rapture3D Approach

The Rapture3D game audio engines take a different approach. Rapture3D uses mono "sources", which behave like the mono objects with spatial data described above.

However, when Rapture3D refers to "beds", these might be 5.1, 7.1.2 or ambisonic, and there might be more than one. There is no great distinction between channel-based and scene-based audio here. Rapture3D Universal can also move these beds around in space, so beds have all the essential features of objects too (albeit multichannel ones) and can be used just as freely to create interactive content.
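Moving an ambisonic bed around can be as simple as rotating it. The sketch below rotates a first-order bed about the vertical axis (yaw), assuming ACN channel order (W, Y, Z, X), SN3D normalisation and azimuth measured anticlockwise from the front. It is a generic illustration of the idea, not Rapture3D's implementation or API; full 3D rotation and higher orders need more general rotation matrices.

```python
import math
from typing import List


def encode_foa(azimuth_deg: float, elevation_deg: float) -> List[float]:
    """First-order encoding gains (ACN order W, Y, Z, X; SN3D) for a unit source."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return [1.0, math.sin(az) * math.cos(el), math.sin(el), math.cos(az) * math.cos(el)]


def rotate_yaw(frame: List[float], angle_deg: float) -> List[float]:
    """Rotate one first-order frame so every source moves angle_deg anticlockwise.

    W (omni) and Z (height) are unaffected by a rotation about the vertical axis;
    X and Y mix like the coordinates of a 2D vector.
    """
    w, y, z, x = frame
    t = math.radians(angle_deg)
    return [w, y * math.cos(t) + x * math.sin(t), z, x * math.cos(t) - y * math.sin(t)]


# Usage: a source straight ahead, rotated by 90 degrees, ends up hard left.
rotated = rotate_yaw(encode_foa(0.0, 0.0), 90.0)
expected = encode_foa(90.0, 0.0)
print(all(abs(a - b) < 1e-12 for a, b in zip(rotated, expected)))  # True
```

In practice the rotation would be applied to every sample frame of the bed, with the angle updated as the bed moves.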

Reverb can be added dynamically too, depending on the actual beds and sources playing at any particular time.

Presenting Ambisonic Audio on Spatial Audio Systems

Unfortunately, Spatial Audio systems like Dolby Atmos and Apple Spatial Audio currently do not support ambisonics directly. What then are we to do with a complex scene mixed or captured with ambisonics?

One approach is to decode the audio to a bed such as 7.1.2, and for simple scenes, this might be acceptable. However, as discussed above, the 7.1.2 will not necessarily be played on a 7.1.2 system, so it will often need to be converted for playback, which will typically result in degraded audio. These same issues also apply to material mixed directly in 7.1.2 without ambisonics.
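A minimal sketch of the "decode to a bed" approach is a basic sampling (projection) decoder, which feeds each speaker according to how closely its direction matches the encoded sound field. The 7.1.2 angles below are assumed nominal values for illustration only, the LFE channel is left out, and a first-order ACN/SN3D signal is assumed. A practical decoder for an irregular layout like 7.1.2 would be optimised far more carefully; this crude version only shows how a fixed set of speaker feeds is produced from the ambisonic channels.

```python
import math
from typing import Dict, List, Tuple

# Assumed nominal 7.1.2 speaker directions (azimuth, elevation) in degrees,
# azimuth positive to the left. Illustrative only; real layouts vary. LFE omitted.
LAYOUT_712: Dict[str, Tuple[float, float]] = {
    "L": (30.0, 0.0), "R": (-30.0, 0.0), "C": (0.0, 0.0),
    "Lss": (100.0, 0.0), "Rss": (-100.0, 0.0),
    "Lrs": (140.0, 0.0), "Rrs": (-140.0, 0.0),
    "Ltm": (90.0, 45.0), "Rtm": (-90.0, 45.0),
}


def encode_foa(azimuth_deg: float, elevation_deg: float) -> List[float]:
    """First-order encoding gains for a unit source (ACN order W, Y, Z, X; SN3D)."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return [1.0, math.sin(az) * math.cos(el), math.sin(el), math.cos(az) * math.cos(el)]


def decode_basic(frame: List[float], layout: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Basic sampling decoder: feed_i = (W + 3 * (direction_i . [X, Y, Z])) / N.

    The factor 3 (= 2n + 1 for order n = 1) accounts for SN3D normalisation,
    making this equivalent to projecting onto N3D spherical harmonics.
    Feeds can be negative, which is typical of this simple decoder type.
    """
    w, y, z, x = frame
    n = len(layout)
    feeds = {}
    for name, (az_deg, el_deg) in layout.items():
        az, el = math.radians(az_deg), math.radians(el_deg)
        dx, dy, dz = math.cos(az) * math.cos(el), math.sin(az) * math.cos(el), math.sin(el)
        feeds[name] = (w + 3.0 * (x * dx + y * dy + z * dz)) / n
    return feeds


# Usage: a source 30 degrees to the left lands mostly in the front-left channel.
feeds = decode_basic(encode_foa(30.0, 0.0), LAYOUT_712)
print(max(feeds, key=feeds.get))  # L
```

Once decoded like this, the result is an ordinary 7.1.2 bed, and it inherits every conversion problem a channel-based bed has when played on a different layout.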

Another issue is that the speaker angles used in beds like 7.1.2 have quite wide tolerances, so, for instance, a "front left" speaker might be at any of a range of angles, perhaps 30 or 45 degrees. This means that the angles at which sounds are finally presented can vary considerably, particularly if you are used to ambisonic precision.
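The effect of that tolerance can be estimated with the classic stereophonic tangent law, tan(phi) = tan(phi0) * (gL - gR) / (gL + gR), where phi0 is the speaker half-angle and phi is the perceived direction. The sketch below uses it as an idealised model, assuming a centrally seated listener and a symmetric left/right pair, to show that identical channel gains put a phantom image at noticeably different angles on a 30 degree and a 45 degree pair. The function name is illustrative.

```python
import math


def tangent_law_angle(g_left: float, g_right: float, half_angle_deg: float) -> float:
    """Perceived phantom-image angle (degrees, positive = left) predicted by the
    tangent law for a symmetric speaker pair at +/- half_angle_deg."""
    t = math.tan(math.radians(half_angle_deg))
    return math.degrees(math.atan(t * (g_left - g_right) / (g_left + g_right)))


# Same channel gains, two plausible "front left/right" placements.
for half_angle in (30.0, 45.0):
    print(half_angle, round(tangent_law_angle(1.0, 0.5, half_angle), 1))
# 30.0 10.9
# 45.0 18.4
```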

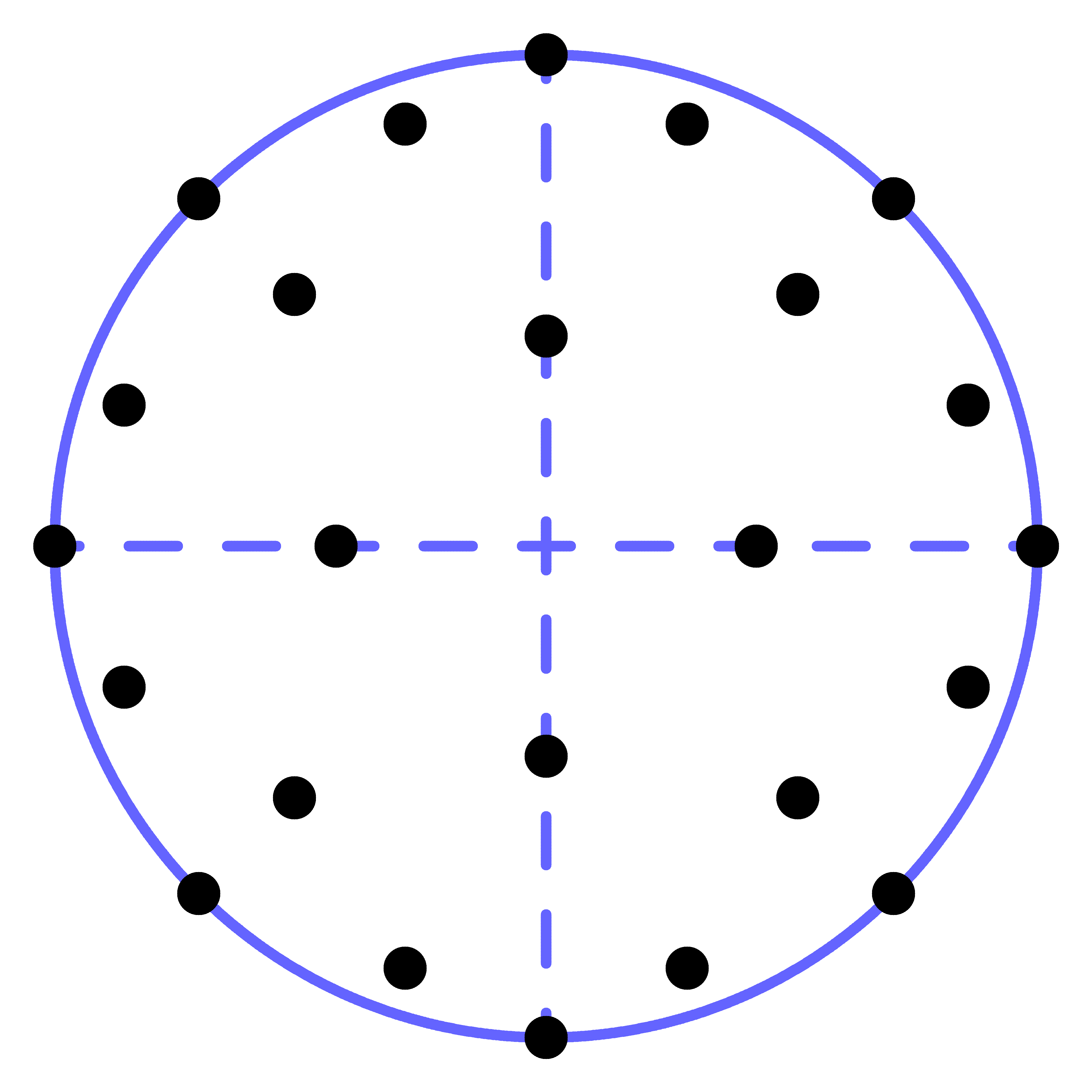

A solution is to use objects only, as virtual speakers in precisely specified locations. This is the approach we have taken in our O3A Spatial Audio plugins, which use a regular "dome" distribution of virtual speakers over the upper hemisphere. This gives decent results, although generally not as good as can be achieved using a good ambisonic decoder directly.
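The virtual-speaker idea can be sketched as follows: generate fixed directions over the upper hemisphere, decode the ambisonic signal to those directions, and emit each feed as a mono object whose position metadata is that direction. The ring elevations and counts here (8 at head height, 6 at 30 degrees, 4 at 60 degrees, plus one overhead) are hypothetical and are not the layout used by the O3A Spatial Audio plugins, and the basic sampling decoder is a crude stand-in for a properly designed one. Keeping the dome to the upper hemisphere reflects the fact that these systems cannot present sounds below head height.

```python
import math
from typing import List, Tuple

Direction = Tuple[float, float]  # (azimuth_deg, elevation_deg), azimuth positive to the left


def dome_layout(rings=((0.0, 8), (30.0, 6), (60.0, 4)), zenith: bool = True) -> List[Direction]:
    """Hypothetical dome: rings of evenly spaced virtual speakers, plus an optional zenith."""
    directions = [(360.0 * k / count, elevation) for elevation, count in rings for k in range(count)]
    if zenith:
        directions.append((0.0, 90.0))
    return directions


def encode_foa(azimuth_deg: float, elevation_deg: float) -> List[float]:
    """First-order encoding gains for a unit source (ACN order W, Y, Z, X; SN3D)."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return [1.0, math.sin(az) * math.cos(el), math.sin(el), math.cos(az) * math.cos(el)]


def to_virtual_speaker_objects(frame: List[float],
                               directions: List[Direction]) -> List[Tuple[Direction, float]]:
    """Decode one first-order frame with a basic sampling decoder.

    Returns (position metadata, feed) pairs: each pair would become one mono object.
    """
    w, y, z, x = frame
    n = len(directions)
    objects = []
    for az_deg, el_deg in directions:
        az, el = math.radians(az_deg), math.radians(el_deg)
        dot = x * math.cos(az) * math.cos(el) + y * math.sin(az) * math.cos(el) + z * math.sin(el)
        objects.append(((az_deg, el_deg), (w + 3.0 * dot) / n))
    return objects


# Usage: 19 virtual speakers; a source straight ahead feeds the front speaker most.
dome = dome_layout()
objects = to_virtual_speaker_objects(encode_foa(0.0, 0.0), dome)
print(len(objects))                             # 19
print(max(objects, key=lambda obj: obj[1])[0])  # (0.0, 0.0)
```

Every virtual speaker becomes an object, so the dome's size directly sets how many objects the mix uses.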

Be careful not to use too many virtual speakers though. If too many objects are used in a Spatial Audio mix, it is possible that a downstream system will fold some of those objects into a channel-based bed anyway to save bandwidth for distribution.